Helm.ai Introduces Helm.ai Driver, Vision-Only Real-Time Path Prediction Neural Network for Urban Driving

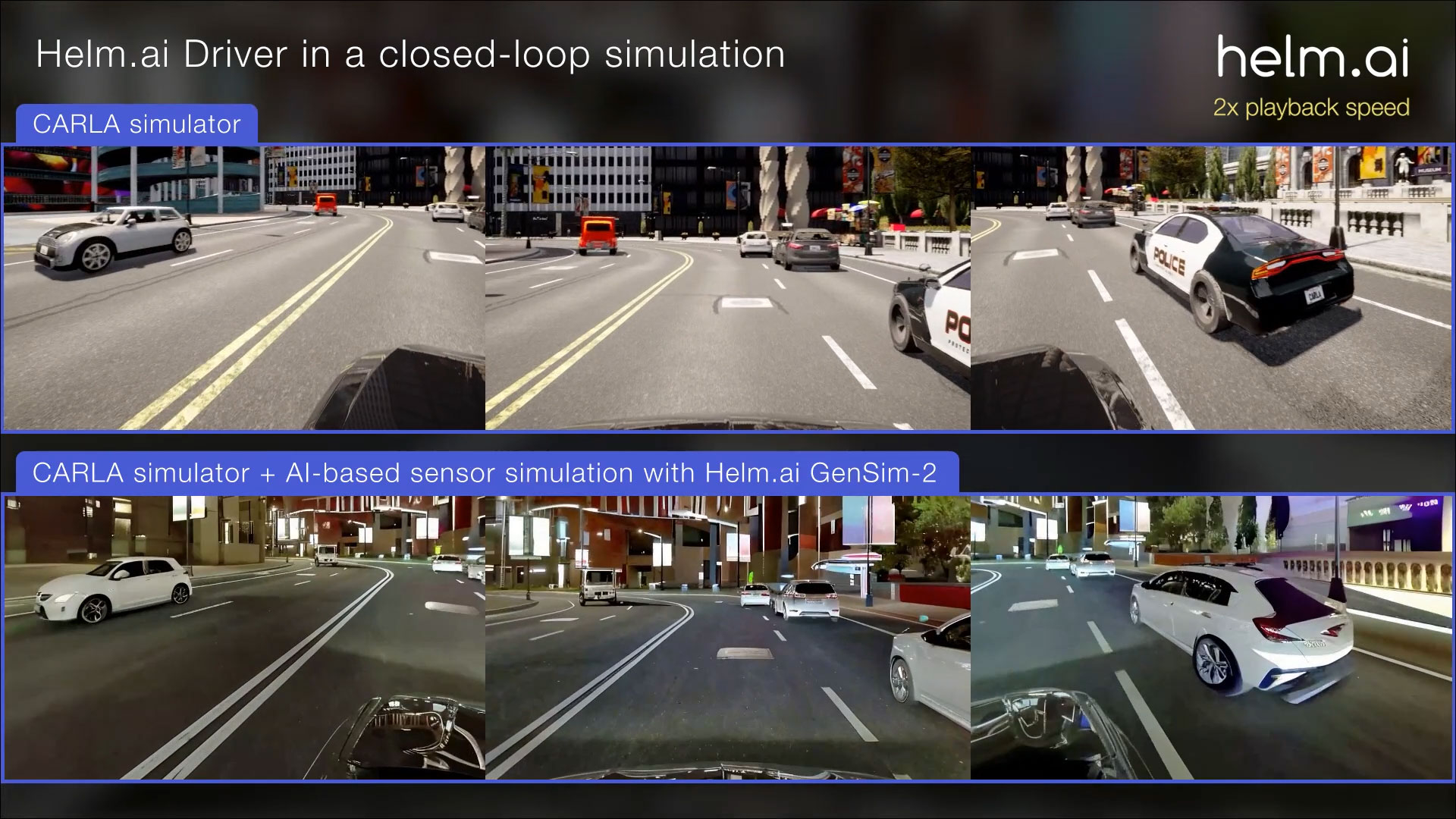

REDWOOD CITY, Calif.--(BUSINESS WIRE)--Helm.ai, a leading provider of advanced AI software for high-end ADAS, autonomous driving, and robotics automation, today introduced Helm.ai Driver, a real-time, transformer-based deep neural network (DNN) path prediction system for highway and urban Level 2 to Level 4 autonomous driving. The company demonstrates the model’s path prediction capabilities in a closed-loop simulation environment using its proprietary generative AI foundation model, GenSim-2, to re-render realistic sensor data in simulation.

The new model predicts the future path of the self-driving vehicle in real time using only camera-based perception, with no HD maps, lidar, or additional sensors required. It takes as input the output of Helm.ai’s production-grade perception stack, making it directly compatible with highly validated perception software. This modular architecture enables efficient validation and greater interpretability.

Trained on large-scale real-world data using Helm.ai’s proprietary Deep Teaching™ methodology, the path prediction model exhibits robust, human-like driving behaviors in complex urban scenarios, including intersections, turns, obstacle avoidance, passing maneuvers, and responses to vehicle cut-ins. Notably, these behaviors emerge from end-to-end learning rather than being explicitly programmed or tuned into the system.

To demonstrate the model’s path prediction capabilities in a realistic, dynamic environment, Helm.ai deployed it in a closed-loop simulation using the open-source CARLA platform (see attached video). In this setting, Helm.ai Driver continuously responds to its environment, much as it would when driving in the real world. Additionally, GenSim-2 re-rendered the simulated scenes to produce realistic camera outputs that closely resemble real-world visuals.

“We’re excited to showcase real-time path prediction for urban driving with Helm.ai Driver, based on our proprietary transformer DNN architecture that requires only vision-based perception as input,” said Vladislav Voroninski, Helm.ai’s CEO and founder. “By training on real-world data, we developed an advanced path prediction system which mimics the sophisticated behaviors of human drivers, learning end-to-end without any explicitly defined rules. Importantly, our urban path prediction for L2 through L4 is compatible with our production-grade surround-view vision perception stack. By further validating Helm.ai Driver in a closed-loop simulator, and combining with our generative AI-based sensor simulation, we’re enabling safer and more scalable development of autonomous driving systems.”

Helm.ai’s foundation models for path prediction and generative sensor simulation are key building blocks of its AI-first approach to autonomous driving. The company continues to deliver models that generalize across vehicle platforms, geographies, and driving conditions.

About Helm.ai

Helm.ai develops next-generation AI software for ADAS, autonomous driving, and robotics automation. Founded in 2016 and headquartered in Redwood City, CA, the company reimagines AI software development to make scalable autonomous driving a reality. Helm.ai offers full-stack real-time AI solutions, including deep neural networks for highway and urban driving, end-to-end autonomous systems, and development and validation tools powered by Deep Teaching™ and generative AI. The company collaborates with global automakers on production-bound projects. For more information on Helm.ai, including products, SDK, and career opportunities, visit https://helm.ai or follow Helm.ai on LinkedIn.